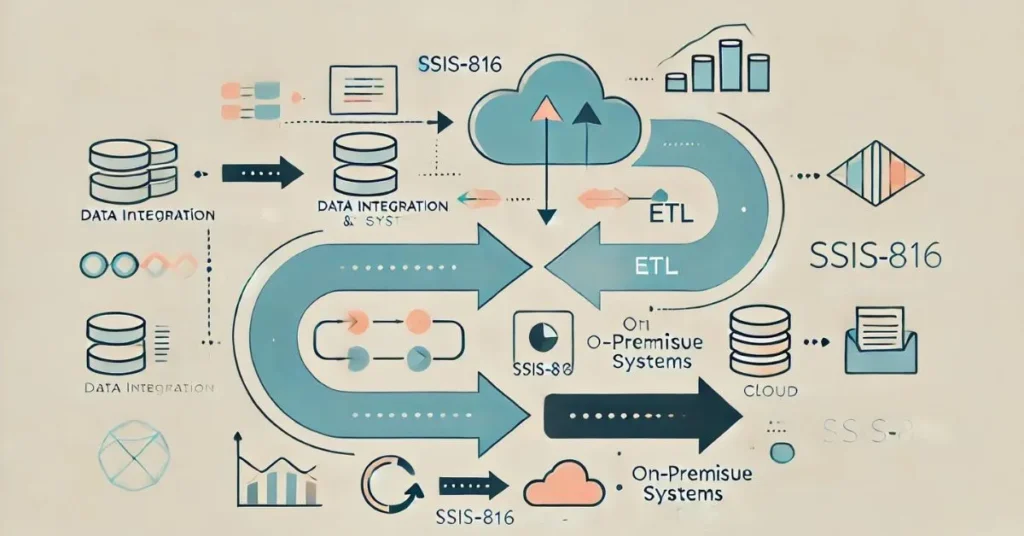

Efficient data management is critical in today’s data-driven world. ETL (Extract, Transform, Load) processes are at the core of moving and transforming data, and SQL Server Integration Services (SSIS) is one popular tool for building them.

SSIS-816 is an advanced version of SSIS, designed to enhance data integration. It offers new features and improvements to streamline ETL workflows. Whether you’re dealing with large datasets or complex transformations, SSIS-816 can make your work easier.

In this post, we’ll explore what SSIS-816 is and how it differs from standard SSIS. We’ll look at its key features and the benefits it provides. You will learn how it can help optimize data pipelines and improve the overall performance of your ETL processes.

SSIS-816 is particularly useful for professionals managing data across different platforms. It helps integrate on-premise databases with cloud-based services. This makes it ideal for hybrid data environments where seamless data flow is essential.

If you are already familiar with SSIS, you will find SSIS-816 a valuable upgrade. It offers better performance, scalability, and security. Moreover, its enhanced data transformation capabilities are designed to handle more complex data flows.

This blog post will guide you through everything you need to know about SSIS-816. We’ll cover the basics, its key features, and practical ways to implement it. Whether you are a data engineer or a business intelligence developer, this post will provide insights into how SSIS-816 can help improve your data processes.

Understanding the Basics of This Tool

Overview of SSIS Architecture

SQL Server Integration Services (SSIS) is a tool used for data integration. It helps in extracting data from various sources, transforming it, and loading it into a destination. SSIS is commonly used in data warehousing and big data processing.

The architecture consists of three main components: control flow, data flow, and connection managers. Control flow defines the order in which tasks are executed. Data flow deals with how data is transformed and moved between sources and destinations.

Connection managers handle the connections to data sources and destination systems, such as databases, flat files, and cloud services. This is what lets the tool work with a wide range of data sources, making it versatile. Understanding these core components is essential before diving into the advanced version.
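
To make the three components concrete, here is a minimal sketch in plain Python rather than in SSIS itself. It assumes an in-memory SQLite database standing in for both the source and the destination, and the table and function names (`raw_orders`, `clean_orders`, `run_package`) are hypothetical. The connection plays the role of a connection manager, the ordered statements in `run_package` mimic control flow, and `extract`, `transform`, and `load` mimic a data flow.

```python
import sqlite3

def extract(conn):
    """Data flow source: read the rows from the source table."""
    return conn.execute("SELECT id, name, amount FROM raw_orders").fetchall()

def transform(rows):
    """Data flow transformation: tidy names and convert amounts to cents."""
    for order_id, name, amount in rows:
        yield order_id, name.strip().title(), round(amount * 100)

def load(conn, rows):
    """Data flow destination: write the transformed rows."""
    conn.executemany("INSERT INTO clean_orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_package():
    # "Connection manager": one in-memory database stands in for both
    # the source and the destination system (an assumption of this sketch).
    conn = sqlite3.connect(":memory:")
    # "Control flow": the steps below run in a fixed order.
    conn.execute("CREATE TABLE raw_orders (id INTEGER, name TEXT, amount REAL)")
    conn.execute(
        "CREATE TABLE clean_orders (id INTEGER, name TEXT, amount_cents INTEGER)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [(1, "  alice ", 12.5), (2, "BOB", 3.0)],
    )
    load(conn, transform(extract(conn)))
    return conn.execute("SELECT * FROM clean_orders ORDER BY id").fetchall()
```

Calling `run_package()` returns the cleaned rows, `[(1, 'Alice', 1250), (2, 'Bob', 300)]`.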

What Sets This Advanced Version Apart?

This version builds upon the foundation of standard SSIS. It includes new features that enhance the performance of ETL processes. These enhancements are especially useful for complex data workflows and large datasets.

One key difference is the improved data transformation capabilities. The tool also offers better integration with cloud-based services like Azure. This makes it ideal for hybrid environments where both on-premise and cloud data are used.

Additionally, it focuses on optimizing the performance of ETL tasks. It provides features like parallel execution and memory management. These improvements help in handling bigger and more complex data flows.

Use Cases and Applications of the Tool

The tool is used in many industries to manage data efficiently. It is ideal for businesses that rely on large-scale data integration. For example, it can be used in financial services to process transaction data from multiple sources.

It is also popular in e-commerce for integrating customer data from different platforms. Healthcare organizations use the tool to manage patient records and improve data accuracy. The versatility of the tool makes it suitable for any data-driven industry.

Key Features of This ETL Tool

Enhanced Data Transformation Capabilities

One of the main strengths of this version is its ability to handle complex data transformations. It introduces advanced transformation tasks that are not available in the standard version. This allows for more flexibility and control over how data is processed.

With these enhancements, you can create custom data flows that meet specific business needs. This is especially useful when dealing with large datasets or performing multiple transformations in one pipeline. It helps make ETL workflows more efficient and easier to manage.

Cloud Integration and Hybrid ETL Workflows

The tool supports seamless integration with cloud platforms like Azure and AWS. This is particularly useful for businesses that operate in both on-premise and cloud environments. It simplifies the process of moving data between local servers and cloud services.

Hybrid ETL workflows are becoming more common as businesses shift to the cloud. This version allows for real-time data synchronization between cloud and on-premise systems, so that data stays up to date across all platforms.

Performance and Scalability Features

This tool is designed with performance in mind. It includes features like parallel execution, which allows multiple tasks to run at the same time. This significantly speeds up the ETL process, especially when dealing with large volumes of data.

Scalability is another important aspect of the tool. It can handle large datasets without slowing down or crashing. This makes it suitable for businesses that expect their data needs to grow over time.

Security Enhancements

Security is a top priority when working with sensitive data. The tool comes with enhanced security features to protect data during the ETL process. It supports encryption, authentication, and access control.

These security features ensure that only authorized users can access or modify the data. This is important for industries that deal with sensitive information, like healthcare or finance. With this tool, you can be confident that your data is secure throughout the workflow.

Step-by-Step Guide to Implementing the Tool

Prerequisites and Setup

Before you start using the tool, there are a few prerequisites. You need to have SQL Server installed on your system. Additionally, you should have Visual Studio or SQL Server Data Tools (SSDT) for creating and managing your packages.

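Make sure that your system meets the necessary hardware and software requirements. You will also need the Integration Services components, which are downloaded and installed separately. For recent Visual Studio versions, this means the SQL Server Integration Services Projects extension. Once installed, you are ready to start building your ETL workflows.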

Creating an ETL Project

To create a new project, open Visual Studio or SSDT. Select the option to create a new Integration Services project. Name your project and choose a location to save it.

Next, you’ll need to configure the connection managers. These define where the data will come from and where it will go. You can connect to databases, flat files, or cloud services, depending on your project needs.

Building Your First ETL Package

Start by creating a new ETL package within your project. Add tasks to the control flow, such as data extraction from a source or a transformation task. Each task in the control flow will define a step in your ETL process.

Move on to the data flow to define how data is transformed as it moves from source to destination. The tool provides advanced transformations that can be added here. Once you have configured the tasks, validate and test your package to ensure it runs correctly.

Deploying Packages to a Server

Once your package is ready, you can deploy it to a SQL Server instance or a cloud service. The tool allows for flexible deployment options, depending on where your data is stored. Use SQL Server Management Studio or a similar tool to manage deployment.

You can also schedule your package to run automatically using SQL Server Agent. This helps automate the ETL process, ensuring that data is updated regularly without manual intervention. Make sure to monitor the package for any errors during execution.
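
For packages stored as files, one option is to run them from the command line with the `dtexec` utility, which a scheduler can then call. The helper below is only a sketch: it builds the argument list without running anything, and the package path and variable name in the usage line are hypothetical. Packages deployed to the SSIS catalog are addressed differently (with `/ISServer`), which this sketch does not cover, and the property path format should be checked against the documentation for your version.

```python
def build_dtexec_command(package_path, variables=None):
    """Build an argument list for running a package file with dtexec."""
    command = ["dtexec", "/File", package_path]
    for name, value in (variables or {}).items():
        # /Set overrides a property at run time, written as propertyPath;value.
        command += [
            "/Set",
            f"\\Package.Variables[User::{name}].Properties[Value];{value}",
        ]
    return command
```

For example, `build_dtexec_command("C:\\etl\\LoadOrders.dtsx", {"BatchDate": "2024-01-31"})` produces a list that could be passed to `subprocess.run` on a machine where `dtexec` is installed.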

Optimizing the Tool for Performance

Best Practices for Performance Tuning

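Performance tuning is essential for ensuring that your packages run efficiently. One of the best practices is to tune buffer sizes. In standard SSIS, the DefaultBufferSize and DefaultBufferMaxRows properties of a Data Flow Task control how many rows each buffer carries, and well-sized buffers let data move through the pipeline with less overhead.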

Another tip is to avoid blocking transformations where possible. Transformations such as Sort and Aggregate slow down the process because they hold back the data flow until all input rows have been read. Non-blocking transformations pass rows along as they arrive, which keeps the data moving and improves speed.
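
The difference can be illustrated with Python generators. This is a sketch of the idea, not of SSIS internals, and all function names are hypothetical. `uppercase_names` emits each row as soon as it arrives, while `sort_by_amount` must read the whole input before it can emit anything.

```python
def uppercase_names(rows):
    """Non-blocking: each row is passed on as soon as it arrives."""
    for row in rows:
        yield {**row, "name": row["name"].upper()}

def sort_by_amount(rows):
    """Blocking: nothing can be emitted until every row has been read."""
    yield from sorted(rows, key=lambda row: row["amount"])

def source(log):
    """Yield three rows, recording each read in `log`."""
    for i, amount in enumerate([30, 10, 20]):
        log.append(f"read {i}")
        yield {"name": f"row{i}", "amount": amount}

def first_row(transform):
    """Pull one row through a transform and report what the source had to read."""
    log = []
    row = next(transform(source(log)))
    return row, log
```

`first_row(uppercase_names)` needs only one read from the source, while `first_row(sort_by_amount)` forces all three reads before the first row comes out.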

Parallelism and Data Partitioning

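The tool allows you to run multiple tasks in parallel. In standard SSIS, control flow tasks that have no precedence constraint between them can run concurrently, up to the limit set by the package’s MaxConcurrentExecutables property. By configuring parallel execution, you can reduce the time it takes to process large datasets.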

Data partitioning is another useful technique for handling large amounts of data. It splits the data into smaller chunks, allowing the tool to process each chunk faster. This helps in managing and scaling up for bigger projects.
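
As a rough illustration of both ideas together, the sketch below splits a dataset into chunks and processes them concurrently in plain Python. The `process_chunk` function is a hypothetical stand-in for real ETL work, and the chunk size and worker count are arbitrary. Threads suit I/O-bound steps such as database calls; CPU-heavy work in Python would more likely use processes.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows, size):
    """Split a list of rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]

def process_chunk(chunk):
    """Stand-in for one unit of ETL work: total the values in a chunk."""
    return sum(chunk)

def process_in_parallel(rows, size=3, workers=4):
    """Process each chunk on a worker thread and return results in chunk order."""
    chunks = partition(rows, size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves chunk order even though chunks run concurrently.
        return list(pool.map(process_chunk, chunks))
```

With the values 1 through 10 and a chunk size of 3, `process_in_parallel(list(range(1, 11)))` returns `[6, 15, 24, 10]`.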

Error Handling and Debugging

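Proper error handling is critical for smooth ETL operations. You can configure error outputs on the components inside a data flow. Instead of failing the whole package, these outputs redirect the rows that cause errors during transformations so they can be inspected or corrected later.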
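
The same pattern can be sketched in plain Python. The function below is a hypothetical example, not an SSIS API: it converts an `amount` field and sends rows that fail to a separate error list, together with the reason, rather than stopping the run.

```python
def convert_amounts(rows):
    """Convert the 'amount' field to float, redirecting rows that fail."""
    good, errors = [], []
    for row in rows:
        try:
            good.append({**row, "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the original row plus the reason, like an error output.
            errors.append({"row": row, "error": type(exc).__name__})
    return good, errors
```

The error list can then be written to its own table or file for review while the good rows continue to the destination.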

Logging is also an important part of debugging. The tool offers detailed logging options to help you track package execution and identify where issues occur. By using logs, you can troubleshoot problems quickly and keep your data flowing smoothly.

Scalability Tips

Scalability is important if your data needs are growing. The tool is built to handle large-scale ETL operations, but there are a few steps you can take to maximize its scalability. One tip is to use data flow parallelism to process large datasets more efficiently.

Another tip is to adjust your package configurations as your data volume increases. This includes fine-tuning buffer sizes and batch settings to handle larger data loads. Cloud integration also allows you to scale your data operations easily by utilizing cloud resources.
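
To show what a batch setting controls, here is a small sketch in plain Python with SQLite. It assumes a hypothetical `facts` table and commits once per batch, so a larger `batch_size` means fewer commits for the same number of rows. The right value depends on the destination and has to be measured.

```python
import sqlite3
from itertools import islice

def batched(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    iterator = iter(rows)
    while batch := list(islice(iterator, size)):
        yield batch

def load_in_batches(conn, rows, batch_size):
    """Insert rows into the hypothetical `facts` table, committing per batch."""
    commits = 0
    for batch in batched(rows, batch_size):
        conn.executemany("INSERT INTO facts (value) VALUES (?)", batch)
        conn.commit()
        commits += 1
    return commits
```

Loading ten rows with `batch_size=4` takes three commits, and the same rows with `batch_size=10` take one.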

Common Challenges and How to Overcome Them

Integration Issues with Legacy Systems

Integrating the tool with older, legacy systems can be challenging. These systems may not support modern data formats or connections. To overcome this, use built-in connectors or third-party adapters that can bridge the gap between new and old systems.

Another challenge is data compatibility between legacy systems and newer databases. You may need to perform data conversions to ensure compatibility. The tool’s data conversion and transformation components make this process easier.
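
A typical conversion of this kind is turning fixed-width legacy records into typed fields. The sketch below is written in plain Python and assumes a made-up record layout: a 5-character id, a 10-character name, and an 8-character date in YYYYMMDD form.

```python
from datetime import datetime

def parse_legacy_record(line):
    """Parse one fixed-width record: id (5), name (10), date YYYYMMDD (8)."""
    return {
        "id": int(line[0:5]),
        "name": line[5:15].strip(),
        # Convert the compact legacy date into ISO 8601 (YYYY-MM-DD).
        "opened": datetime.strptime(line[15:23], "%Y%m%d").date().isoformat(),
    }
```

A record made of `00042`, the name `JONES` padded to ten characters, and `19991231` becomes `{"id": 42, "name": "JONES", "opened": "1999-12-31"}`.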

Data Quality and Validation

Maintaining data quality is a common problem in ETL workflows. Poor data quality can lead to incorrect results and unreliable analytics. To address this, use data profiling and validation features.

These features help you check for missing values, duplicates, and inconsistencies in your data. Implementing data validation rules at the start of your ETL process ensures that only clean, accurate data moves through the pipeline. This reduces errors downstream and improves the reliability of your data.
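
A minimal version of such validation rules, sketched in plain Python, might look like the following. The required field names (`id`, `email`) are assumptions for illustration. Rows with missing values or a repeated id are reported instead of being passed downstream.

```python
def validate(rows, required=("id", "email")):
    """Return (clean_rows, problems) after checking required fields and duplicate ids."""
    clean, problems, seen = [], [], set()
    for index, row in enumerate(rows):
        missing = [field for field in required if row.get(field) in (None, "")]
        if missing:
            problems.append((index, f"missing: {', '.join(missing)}"))
        elif row["id"] in seen:
            problems.append((index, "duplicate id"))
        else:
            seen.add(row["id"])
            clean.append(row)
    return clean, problems
```

Each problem is reported with the index of the offending row, which makes it straightforward to trace back to the source.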

Security and Compliance Pitfalls

Ensuring data security during ETL processes is essential, especially when handling sensitive information. The tool includes encryption and secure connection options to protect data in transit. You can also implement role-based access controls to limit who can access the data.

Compliance with industry standards, such as GDPR or HIPAA, can be tricky. The tool helps by supporting secure logging and auditing, which makes it easier to demonstrate that your data processes meet regulatory requirements. Regularly review your security settings to stay compliant with evolving standards.

Advanced Techniques in the Tool

Real-Time Data Processing

The tool allows you to process data in real time, which is useful for businesses that need up-to-the-minute data. This is achieved by integrating with real-time data sources like IoT devices or streaming services. Real-time ETL helps keep your data updated without delays, making it valuable for industries like finance or retail.

Using data flow capabilities, you can set up continuous data processing pipelines. This ensures that data is always available for analysis. Real-time processing also improves decision-making by providing the latest information.
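
One common way to approximate continuous processing is micro-batching: picking up whatever has arrived and processing it in small groups. The sketch below is plain Python and assumes events arrive on an in-process `queue.Queue`, which is only a stand-in for a real streaming source. The function names are hypothetical.

```python
import queue

def drain_batch(events, max_rows):
    """Take up to `max_rows` waiting events without blocking."""
    batch = []
    while len(batch) < max_rows:
        try:
            batch.append(events.get_nowait())
        except queue.Empty:
            break
    return batch

def run_micro_batches(events, max_rows=2):
    """Process waiting events in small batches until the queue is empty."""
    totals = []
    while True:
        batch = drain_batch(events, max_rows)
        if not batch:
            return totals
        # Stand-in for the real per-batch work: total the event values.
        totals.append(sum(batch))
```

A long-running pipeline would call `run_micro_batches` on a timer or in a loop with a short sleep rather than returning once the queue is empty.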

Custom Scripting and .NET Integration

One of the strengths of the tool is its ability to integrate custom scripts written in .NET languages such as C# or Visual Basic. This allows you to extend the functionality of your ETL packages. You can create custom transformations or tasks that are not available in the standard SSIS toolbox.

For example, you can write a script to handle complex data calculations or automate repetitive tasks. The tool makes it easy to embed these scripts into your data flow. This flexibility allows you to customize your ETL process to fit your specific needs.

Integrating with Machine Learning Workflows

The tool can also be integrated with machine learning models to enhance data processing. You can use it to prepare data for training machine learning algorithms. Once the model is trained, the tool can automate the deployment and scoring of new data.

For example, you can integrate with platforms like Azure Machine Learning to run predictions on your data. This combination of ETL and machine learning allows for more advanced data workflows. It is especially useful for businesses looking to gain insights through predictive analytics.

Monitoring, Logging, and Maintenance

Monitoring Workflows

Monitoring your packages is crucial to ensure they run smoothly. The tool provides built-in tools to track package performance and execution. By setting up real-time monitoring, you can quickly detect and fix issues before they affect your ETL process.

You can also use SQL Server Management Studio (SSMS) or third-party tools for advanced monitoring. These tools give detailed insights into package execution times and resource usage. This helps optimize your workflows and prevent potential failures.
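
The kind of per-step timing these tools report can be sketched in a few lines of plain Python. The helper names are hypothetical and the steps are passed in as ordinary callables. This only illustrates the idea of recording execution times per step.

```python
import time
from contextlib import contextmanager

@contextmanager
def timed(step, timings):
    """Record how long the wrapped block takes, in seconds, under `step`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Runs even if the step raises, so failed steps are still timed.
        timings[step] = time.perf_counter() - start

def run_with_timings(steps):
    """Run (name, callable) pairs in order and return their durations."""
    timings = {}
    for name, step in steps:
        with timed(name, timings):
            step()
    return timings
```

Comparing these durations between runs is a simple way to spot a step that has started to slow down.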

Logging Best Practices

Effective logging helps you track what happens during the ETL process. The tool supports detailed logging, allowing you to capture events like errors, warnings, and task completion. Proper logging helps with troubleshooting and provides a history of package execution.

To implement logging, use the built-in log providers or a custom logging solution. You can store logs in text files, SQL Server databases, or even cloud storage. This makes it easy to analyze performance and resolve issues quickly.
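
As an illustration of the events worth capturing, the sketch below uses the logging module from the Python standard library to record the start, completion, and failure of each task. The logger name and message format are assumptions, and the log goes to whatever stream is passed in (a file object would work the same way).

```python
import io
import logging

def make_package_logger(stream):
    """Create a logger that writes 'LEVEL message' lines to `stream`."""
    logger = logging.getLogger("etl.package")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate lines if called twice
    logger.propagate = False  # keep output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger

def run_task(logger, name, task):
    """Run one task, logging its start and either completion or failure."""
    logger.info("start %s", name)
    try:
        task()
    except Exception:
        logger.error("failed %s", name)
        return False
    logger.info("done %s", name)
    return True
```

A failed task leaves an `ERROR failed <name>` line directly after its `INFO start <name>` line, which makes the point of failure easy to find.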

Maintenance and Upgrades

Regular maintenance is essential to keep your packages running efficiently. This includes checking for updates, optimizing performance settings, and reviewing logs for potential issues. Scheduling routine maintenance helps avoid unexpected downtime.

Upgrading packages is also important as your data needs grow. Make sure to test new versions in a staging environment before deploying them to production. This ensures smooth transitions and minimizes the risk of errors during upgrades.

Conclusion

Recap of Key Points

The tool is a powerful solution that enhances traditional SSIS workflows. It offers improved performance, cloud integration, and advanced data transformation features. These features make it ideal for handling complex and large-scale ETL processes.

We’ve explored the key aspects of this tool, including its setup, features, and optimization techniques, along with real-time data processing, custom scripting, and machine learning integration. This tool is designed to help data professionals streamline and optimize their workflows.

Call to Action

Now that you have a deeper understanding of this tool, it’s time to put it into practice. Whether you’re upgrading from standard SSIS or starting from scratch, this version will help improve your ETL processes. Experiment with the features and techniques discussed in this guide to optimize your data operations.

Engagement and Feedback

We encourage you to try this tool in your data projects and share your experiences. If you have any questions or challenges, feel free to leave a comment or reach out for help. Your feedback will help others in the data community as well!

FAQs

1. What is SSIS-816, and how is it different from standard SSIS?

SSIS-816 is an advanced version of SQL Server Integration Services (SSIS), designed to improve data integration and ETL processes. It offers enhanced features such as advanced data transformations, better cloud integration, and optimized performance compared to the standard SSIS.

2. What are the key features of SSIS-816?

Key features include:

- Enhanced data transformation capabilities

- Cloud integration for hybrid ETL workflows

- Improved performance through parallel execution

- Scalability for handling large datasets

- Advanced security features like encryption and access control

3. What are the system requirements for installing SSIS-816?

To use SSIS-816, you need SQL Server installed, along with Visual Studio or SQL Server Data Tools (SSDT). Make sure your system meets the necessary hardware and software requirements, such as sufficient memory and processing power for handling large data flows.

4. Can I use SSIS-816 to integrate on-premise data with cloud platforms?

Yes, SSIS-816 offers seamless integration with cloud platforms like Azure and AWS. It allows for hybrid ETL workflows where data is moved between on-premise systems and cloud services in real time.

5. What are some common use cases for SSIS-816?

SSIS-816 is commonly used in industries like finance, e-commerce, and healthcare for managing large-scale data integration tasks. It is ideal for processing transaction data, integrating customer information, and managing complex workflows that span cloud and on-premise environments.

6. How does SSIS-816 improve performance compared to standard SSIS?

SSIS-816 improves performance through parallel task execution, optimized memory management, and efficient data flow handling. These features help reduce processing times for large datasets and complex ETL workflows.

7. Is SSIS-816 suitable for real-time data processing?

Yes, SSIS-816 supports real-time data processing by integrating with real-time data sources such as IoT devices or streaming services. This keeps your data up to date and available for analysis as it arrives.

8. Can I write custom scripts in SSIS-816?

Yes, SSIS-816 allows you to integrate custom scripts written in .NET languages such as C# or Visual Basic. You can write custom tasks or transformations to meet specific data processing requirements that are not available in the standard SSIS toolbox.

9. What are the security features in SSIS-816?

SSIS-816 provides advanced security features such as data encryption, authentication, and role-based access controls. These features ensure that sensitive data is protected during ETL processes and only authorized users can access the data.

10. How can I optimize SSIS-816 for large-scale data processing?

To optimize SSIS-816 for large-scale data processing, you can:

- Use parallel execution to run multiple tasks at the same time

- Implement data partitioning to break large datasets into smaller chunks

- Adjust buffer sizes and memory settings for better performance

- Monitor and log your packages to identify performance bottlenecks